Commonwealth Cyber Initiative funds seven projects to curb the spread of misinformation campaigns

August 3, 2021

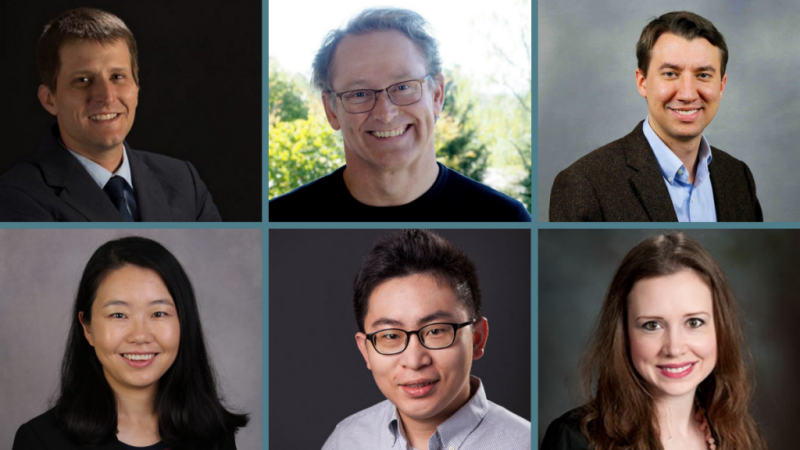

The Commonwealth Cyber Initiative (CCI) has funded seven transdisciplinary projects for $450,000 to explore the role of cybersecurity in curbing the spread of disinformation and misinformation.

“Misinformation and disinformation spreads quickly in our connected society and prevents people from making decisions based on accurate information,” said Luiz DaSilva, CCI executive director. “We want to study how cybersecurity can help control the proliferation of these misinformation campaigns. Researchers are applying artificial intelligence techniques to detect the spread of misinformation through social media, to provide tools for governments and individuals to counteract disinformation campaigns, and to analyze how disinformation can impact connected devices and vehicles.”

Researchers from Virginia Tech, George Mason University, Old Dominion University, Tidewater Community College, and the University of Virginia are collaborating on the projects. Researchers represent a wide range of disciplines, such as engineering, computer science, philosophy and religious studies, policy and government, political science, communication, geography, and psychology.

The funded projects are:

Exploring the Impact of Human-AI Collaboration on Open Source Intelligence Investigations of Social Media Disinformation

Principal Investigator: Kurt Luther, associate professor, computer science, Virginia Tech

Co-Principal Investigators: Aaron Brantly, assistant professor, political science; David Hicks, professor, education; both Virginia Tech

Project description: Open Source Intelligence investigation — the collection and analysis of publicly available information sources, such as social media, for investigative purposes — is a powerful method for combating disinformation and misinformation. However, this investigation technique is typically a manual, time-consuming process, overwhelming investigators with a fire hose of online disinformation. Crowdsourcing, with its ability to massively parallelize data analysis and provide access to highly specialized knowledge, has the potential to assist the disinformation investigation. Researchers will build a pipeline where an expert investigator leverages AI-assisted tools to narrow the search, enlists crowdsourcing to refine the shortlist, and reviews and confirms the results. Inspired by the model of volunteer fire departments, the researchers will organize and evaluate a series of collaborative capture-the-flag events where teams build and deploy new AI-assisted tools to discover and analyze disinformation campaigns. The goal is to provide scalable support for local, state, and federal authorities in fighting disinformation and misinformation.

Fast Deployment of AI-based Disinformation Detector

Principal Investigator: Ruoxi Jia, assistant professor, electrical and computer engineering, and faculty at the Sanghani Center for Artificial Intelligence and Data Analytics, Virginia Tech

Co-Principal Investigators: Jia-Bin Huang, assistant professor, electrical and computer engineering, and faculty at the Sanghani Center for Artificial Intelligence and Data Analytics; Adrienne Ivory, associate professor, communication; both Virginia Tech

Project description: Responding rapidly to disinformation attacks is critical to minimizing the damage caused by these attacks. As fake news is intentionally written to mislead readers during a short time period, it is difficult for a human editor to differentiate between real and fake news. For this project, researchers plan to enable fast deployment of artificial intelligence (AI)-based disinformation detection tools. To do so, researchers must build a large set of training data to train the AI-based detection tool. Researchers will create techniques to strategically label valuable data by looking at different sets of features such as news content, user profiles, message propagation, and social context while learning from this information and mitigating the effects of potential labeling noise on detection models. In addition, the researchers will evaluate public opinion about AI-based detection methods because a better understanding of public perception can help inform social media companies about using disinformation detection to block content.

Machine Learning-based Disinformation Detection and Its Human Trust in Autonomous Vehicle Systems

Principal Investigator: Haiying Shen, associate professor, computer science, University of Virginia

Co-Principal Investigator: Michael Gorman, professor, engineering systems and the environment, University of Virginia

Project description: Future transportation could become a distributed system of connected autonomous vehicles, such as self-driving cars, and other related components. Vehicle-to-vehicle (V2V) communication can make vehicles vulnerable to attacks. Hackers can insert false information into the system so drivers think everything is OK, but in reality an accident is about to happen. Current solutions for preventing these false-information attacks may be too slow. At the same time, human drivers need to be able to trust in the system that’s behind the wheel. Adoption and acceptance of V2V depend on it. To solve this problem, this project will design a machine learning-based disinformation detection system and also perform a study about human trust in the system.

Designing Tools for Proactive Counter-Disinformation Communication to Empower Local Government Agencies

Principal Investigator: Hemant Purohit, assistant professor, information sciences and technology, George Mason University

Co-Principal Investigators: Antonios Anastasopoulos, assistant professor, computer science, George Mason; Tonya Neaves, principal/managing partner, Delta Point Solutions LLC; Huzefa Rangwala, professor, computer science, George Mason

Project description: During a crisis, government agencies are especially vulnerable to targeted disinformation campaigns on social media. A multidisciplinary team of computing and policy researchers is addressing this challenge through three avenues. First, researchers will create a program to characterize disinformation social media posts and show how these posts are connected and what they have in common. Second, using computational methods that employ state-of-the-art natural language processing and machine learning techniques, they’ll detect multilingual disinformation posts. Finally, researchers will combine the results to create an interactive and predictive modeling tool, which could help discover malicious social media attacks on government policies before they have a chance to take root and spread. The project team will use disinformation social media posts from the COVID-19 pandemic to test their new method. The application of this research could proactively aid local government agencies in their future emergency and crisis management efforts.

Question-Under-Discussion Framework to Analyze Misinformation Targeting Security Researchers

Principal Investigator: Sachin Shetty, associate professor, computational modeling and simulation engineering, Old Dominion University

Co-Principal Investigator: Teresa Kissel, assistant professor, philosophy and religious studies, Old Dominion University

Project description: In recent times, adversaries have used social engineering campaigns to target security researchers who work on vulnerability research and development. Through these campaigns, adversaries aim to learn more about nonpublic vulnerabilities that could be used in potential state-sponsored attacks. The researchers will investigate the effectiveness of the question-under-discussion framework to analyze social media communication between security researchers and/or adversaries and report messages that indicate misinformation. The researchers will evaluate the effectiveness of the framework by implementing it in a natural language processing tool developed by the team for cybersecurity research.

The Acceptance and Effectiveness of Explainable AI-Powered Credibility Labeler for Scientific Misinformation and Disinformation

Principal Investigator: Jian Wu, assistant professor, computer science, Old Dominion University

Co-Principal Investigators: Jeremiah Still, assistant professor, psychology; Jian Li, professor, electrical and computer engineering; both Old Dominion University

Project description: Fake scientific news has been spreading across the internet for years, but even more so since COVID-19, prompting such fake stories as “mosquitoes spread coronavirus.” It may be easier for people to believe these pieces of news since they seem intuitively correct. Will they change their minds if they see the evidence against these news articles from scientific literature? Do they trust the credibility scores estimated by artificial intelligence algorithms? Will they still pass these news articles to their friends on Facebook? To find some answers, computer scientists will collaborate with psychologists at Old Dominion University. The project consists of three steps. First, a new algorithm will be researched and implemented to estimate how likely a scientific news article is to be truthful. The algorithm will also provide evidence from scientific publications. Then, a website will be built to show users what is found in the first step. Finally, a study will be performed on news consumers to see their reactions when they are shown fake news articles and debunking information on the website. The hope is to reveal the effectiveness of using scientific literature as a weapon to debunk scientific misinformation and disinformation and eventually curb their spread across society.

Artificial Intelligence-Based Analysis of Misinformation and Disinformation Efforts from Mass Media and Social Media in Creating Anti-U.S. Perceptions

Principal Investigator: Hamdi Kavak, assistant professor, computational and data sciences, George Mason University

Co-Principal Investigators: Saltuk Karahan, lecturer and program coordinator, political science and geography, Old Dominion University; Hongyi Wu, director, School of Cybersecurity, Old Dominion University; Kimberly Perez, professor, information systems technology, Tidewater Community College

Project description: Online disinformation and misinformation campaigns can target the reputation of the United States overseas and affect foreign policy. For this project, researchers will use artificial intelligence to investigate such misinformation/disinformation campaigns, in particular how they may generate anti-U.S. public perception in other countries. The project will rely on data from Turkey, a key U.S. ally and NATO member. The project will collect data from various social media and news sources, analyze the trends using AI techniques, and understand the roles of several actors in shaping public opinion by using misinformation/disinformation campaigns. The results could show how negative social media campaigns impact foreign policy.

Written by Michele McDonald